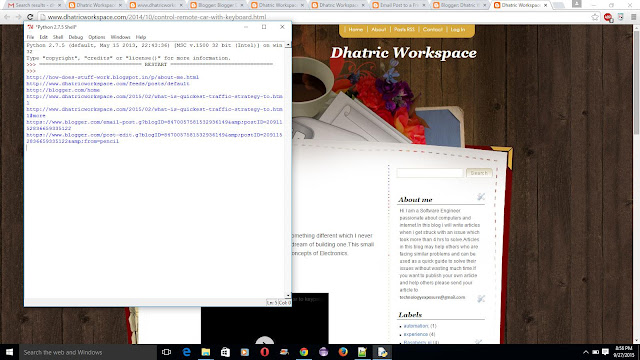

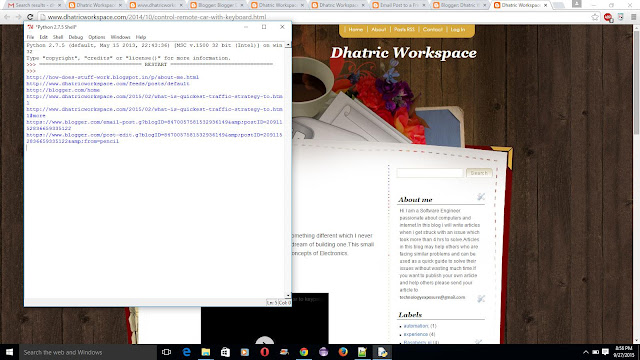

Python Script : Crawl a website ,take screenshots and save it in word doc.

Steps to execute this script

After execution of script ,it will automatically open the default browser and crawl all the pages it in and takes screenshot of all pages.

Once done with the screenshots it will create a doc file with all screenshots in it.

Steps to execute this script

- Create a folder 'link Checker' and place this script in it.

- Create a folder 'shots' and place it inside 'link Checker' folder.

- Change below variables

- url ="website url

" - SaveDirectory=r '

'screenshot directory" - Execute the program.

import requests

from BeautifulSoup import BeautifulSoup

import webbrowser

import os

import sys

import time

import Image

import ImageGrab

import win32com.client as win32

import os

import time

import glob

url = "http://www.dhatricworkspace.com"

response = requests.get(url)

# parse html

page = str(BeautifulSoup(response.content))

alllist = [];

httplist = [];

otherlist = [];

SaveDirectory=r'C:\Users\Giridhar\Desktop\link Checker\shots'

ImageEditorPath=r'C:\WINDOWS\system32\mspaint.exe'

def getURL(page):

"""

:param page: html of web page (here: Python home page)

:return: urls in that page

"""

start_link = page.find("a href")

if start_link == -1:

return None, 0

start_quote = page.find('"', start_link)

end_quote = page.find('"', start_quote + 1)

url = page[start_quote + 1: end_quote]

return url, end_quote

while True:

url, n = getURL(page)

page = page[n:]

if url:

#print url

alllist.append(url)

else:

break

#print alllist

for httpurl in alllist:

if httpurl.find("http")!=-1:

httplist.append(httpurl)

else:

if httpurl.endswith("/",0,1):

otherlist.append(httpurl)

#print httplist

#print otherlist

new = 0

i=1

for browsing in httplist:

webbrowser.get('windows-default').open(browsing,new=new)

time.sleep(10)

img=ImageGrab.grab()

print browsing

saveas=os.path.join(SaveDirectory,'ScreenShot_'+str(i)+'.jpg')

img.save(saveas)

i += 1

if i == 10:

break;

#---------------------------------code to move images to word-------

allpics = []

allpics=glob.glob(os.path.join(os.path.abspath("."),'shots/*.JPG'))

wordApp = win32.gencache.EnsureDispatch('Word.Application') #create a word application object

wordApp.Visible = False # hide the word application

doc = wordApp.Documents.Add() # create a new application

for pic in allpics:

current_pic = doc.InlineShapes.AddPicture(pic)

current_pic.Height= 400

current_pic.Width= 400

doc.SaveAs(os.getcwd()+'\\dhatricworkspace.docx')

wordApp.Application.Quit(-1)

After execution of script ,it will automatically open the default browser and crawl all the pages it in and takes screenshot of all pages.

Once done with the screenshots it will create a doc file with all screenshots in it.

Hello everyone, by the way, I didn't think I would see such an excellent and informative post. By the way, I was looking for buy research paper online here, and at the same time, I saw about Your own Broken Link Checker.

ReplyDeleteOf course, After the execution of the script, it will automatically open the default browser and crawl all the pages it is in, and takes a screenshot of all pages.

Elevate your academic performance with expert guidance! New Assignment Help UK offers personalized Dissertation Proposal Help in UK for Students, making the process stress-free and efficient. Don't miss this opportunity to stand out-contact us today and transform your ideas into success!

ReplyDeleteI found your article on youtube to mp3

ReplyDeletereally helpful. The simple, step-by-step explanation is perfect for people who aren’t tech-savvy. I also appreciate how you added safety advice for downloading. It’s great to have a reliable guide for those who love saving music or educational content offline.

As an MBA student juggling work and studies, I was drowning in assignments. Rapid Assignment Help's MBA Assignment Help really turned things around for me. Their expert writer understood my topic on strategic management and delivered a perfectly formatted, plagiarism-free paper ahead of schedule. The depth of research and clear analysis exceeded my expectations. The Assignment Help UK team kept constant communication, making me feel supported throughout. Thanks to this service, I scored one of my best grades. Honestly, this MBA Assignment Help made my life so much easier, and I'll continue using them for the rest of my MBA journey.

ReplyDeleteI appreciate your explanation of y2mate. Sometimes downloading or converting videos can feel complicated, but tools like this make the process simple and fast. It’s a good option for anyone who prefers offline access to videos or music.

ReplyDeleteI’ve seen bright students lose confidence because of a single tough assignment. That’s when Chemical Engineering Assignment Help becomes crucial. With trusted platforms like Native Assignment Help , students rediscover not just solutions, but belief in their own potential. And belief, after all, is where true learning begins

ReplyDelete